The idea of Big Data is awesome, and the technology stack behind it is simply cool. Big Data is here to stay, and it will change business. Then again, the hype around Big Data carries a lot of superstitious expectations and misconceptions. Big Data can be a trap that leads to stagnation and an inability to decide.

Seeing Big Data as an extension of our sensory system is simply the wrong analogy: multiple people talking at the same time won’t convey more information. An enormous source of information alone won’t make you wiser. A lot of voices and a lot of data mean noise and chaos. You need only a little data, i.e. “Small Data”, to make sense of the surrounding complexity, and only after that can you use Big Data effectively.

The number of different voices does not increase knowledge. Too much information at the same time will most likely make you inefficient and slow.

Less is more. In the ideal situation, you have the minimum amount of information needed to see the pattern, but no less. Too much data is almost as bad as too little: both make you dull and confused, but for opposite reasons.

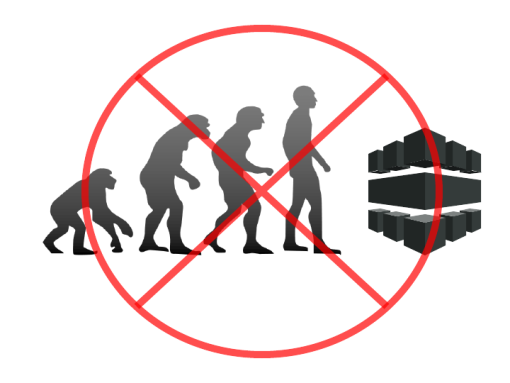

Don’t degenerate to the level of supercomputers!

In a highly complex environment, people’s wit and cunning outperform even the most sophisticated data models and algorithms. The reason is not that human beings are good at processing large amounts of data, but that we are ridiculously good at using Small Data to understand complexity and work with it.

For human beings, walking in the woods is a trivial task. It is more than easy to go around a tree that is in your way. It is not a trivial task for a machine. A machine simply doesn’t know what is relevant and what is not, and thus it needs to take everything into account. In fact, the smartest algorithms, like neural networks, allow a machine to efficiently ignore a large portion of the data and thus find a good solution much faster.

A supercomputer is good with Big Data and terribly bad with Small Data. For human beings, the opposite is true.

A problem with the Big Data mindset is the attempt to take everything into account. Taking every little detail into account would make you slow, inflexible and uncreative. There is absolutely no reason to use a large amount of data if you can do better with a small amount.

This doesn’t mean that you shouldn’t utilize Big Data. Of course you can, and you should, but Big Data does not help if you don’t clearly understand what is relevant and important right now. That’s where you need Small Data and constant experimentation. You should not degenerate to the level of supercomputers. Rather, use your strengths as a human being.

What is Small Data?

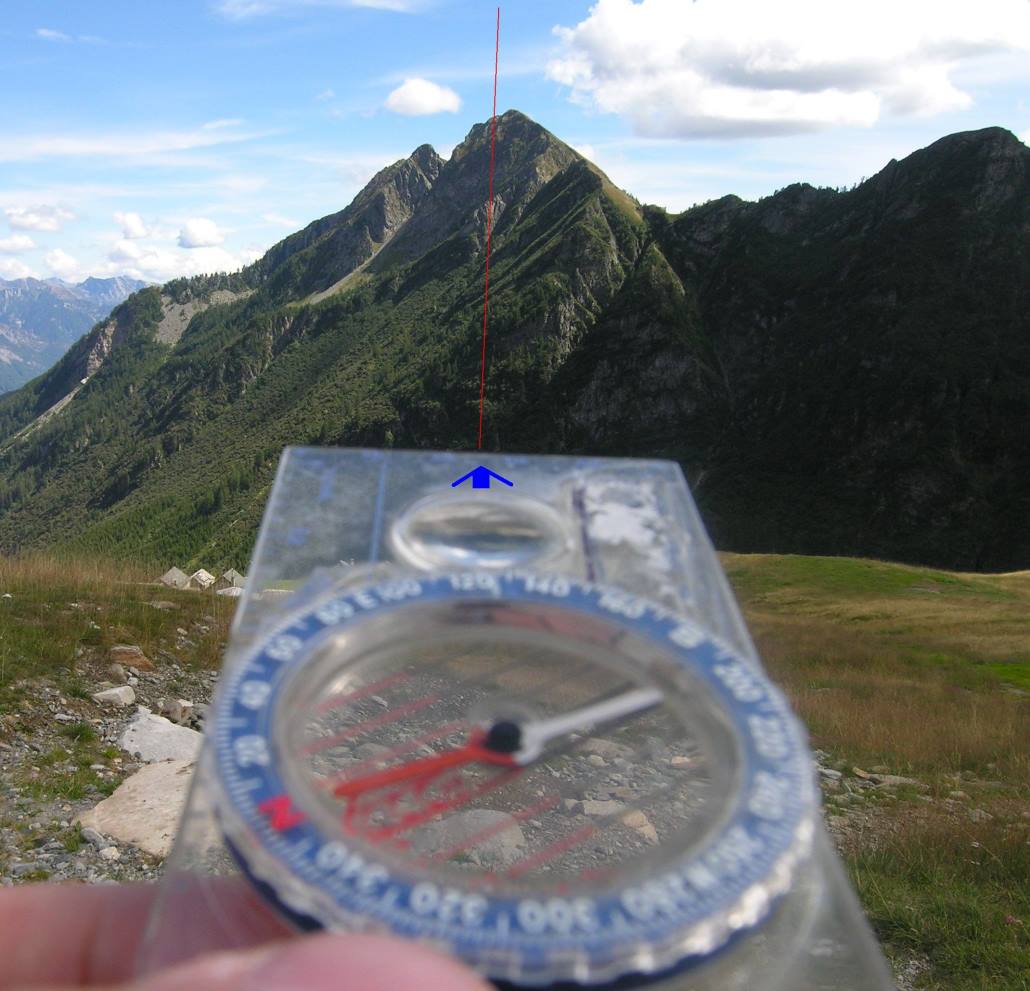

Without landmarks and the direction of north (Small Data), the map (a metaphor for Big Data) can be utterly useless. Without Small Data, Big Data is usually crap.

By definition, Small Data is simple and contains at most hundreds of nuggets of data. It is easy to understand as a whole, even if the data itself is rather complex. Small Data takes the limits of our working memory seriously. You simply cannot keep many variables and metrics in your mind at the same time, especially if you don’t understand the system the data represents well.

Small Data does not represent the surrounding system but rather your direction within it. As an analogy, in orienteering the map is Big Data. Landmarks, a compass and the sight of a tree you need to go around are Small Data. You can find your way from one place to another without a map. A map helps, but it’s not necessary. Some studies state that in big cities people use landmarks, not maps, as the primary way to find their way from one place to another: Small Data works far more efficiently than Big Data. It’s faster to use and easier to remember when needed.

Using Small Data

The key questions Small Data answers are:

1) How do I know what I can and cannot control? A lot of money is wasted on metrics and analytics for things that are too unpredictable to control. In such cases, it’s better to figure out how to adapt when things change.

Validation of success is usually rather obvious. It’s more important to identify early when things are not going to work well enough.

2) How can I notice that I was wrong before it is too late? It is not important to validate whether you were right. In that case, things just work, and that is strong validation for your ideas. It is crucial to identify early if you are wrong. Playing it safe means painfully slow learning, while the opposite will harm you if you don’t mitigate possible bad decisions early.

3) What should I do next? Just like any metrics and analytics, the Small Data you collect should be actionable. This means the data should help you decide what actions to take next. Avoid vanity metrics that only tell you whether things are going well or badly but give you no idea what to do about it.

Far too often, BI (business intelligence) dashboards contain a lot of data intended to prove the correctness of your strategy and little or no data that could falsify your approach and suggest a change of direction. The scientific method suggests a very different approach: collect a lot of data that can falsify your theory and only a little data intended to validate your intuition.

In practice, you need to figure out what is most important right now and select only one metric that matters as the primary compass toward your shared purpose (see Antti’s entry on the importance of shared purpose). Then start doing small experiments, each with a clear hypothesis. The place for Big Data is in those small experiments. Big Data should be used to better understand the complex system you work with, not to set the direction for the business. Don’t let Big Data make your sharp mind dull!
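To make the idea of a small experiment with a clear hypothesis a bit more concrete, here is a minimal Python sketch. It assumes the one metric that matters can be recorded as a single number per observation. The `Experiment` class, its fields, the example hypothesis and the numbers are all hypothetical illustrations, not a prescribed tool. The point is that the kill threshold and the minimum sample size are fixed before any data arrives, so the data is used to try to falsify the hypothesis rather than to confirm it after the fact.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Experiment:
    """Hypothetical helper: one hypothesis, one metric, a kill criterion fixed in advance."""

    hypothesis: str
    metric: str
    kill_threshold: float  # a mean below this value counts as falsifying the hypothesis
    min_samples: int       # do not judge the experiment before this many observations

    def verdict(self, observations: Sequence[float]) -> str:
        # Too little data: refuse to conclude anything yet.
        if len(observations) < self.min_samples:
            return "keep running"
        mean = sum(observations) / len(observations)
        # Compare against the threshold chosen before the experiment started.
        if mean < self.kill_threshold:
            return "falsified: change direction"
        return "not falsified: keep going"


if __name__ == "__main__":
    # Hypothetical experiment; the numbers below are made up for illustration.
    exp = Experiment(
        hypothesis="The new onboarding flow keeps weekly activation at or above 20%",
        metric="weekly_activation_rate",
        kill_threshold=0.20,
        min_samples=5,
    )
    print(exp.verdict([0.18, 0.17]))                    # keep running
    print(exp.verdict([0.18, 0.17, 0.19, 0.16, 0.18]))  # falsified: change direction
```

Each verdict names the next action rather than just reporting whether things look good, which keeps the check actionable in the sense of question 3 above.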